YouTube relies on its algorithms to do many things, including taking down, censoring, and restricting content. But if the algorithm enables pedophiles, then it is also terrifyingly dangerous.

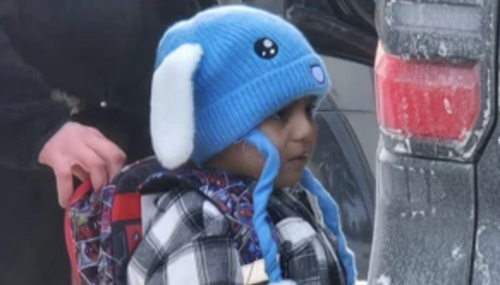

According to a report published by The New York Times, YouTube's algorithms automatically created playlists of partially clothed children that were then exploited by pedophiles. These suggestions came up whenever users searched for sexual terms or watched sexually themed content. The auto-generated playlists featured partially clothed children, including young, prepubescent teens in bathing suits.

Researchers described a progression in which recommendations on sexually themed videos led to further recommendations of women discussing sex and showing body parts, with the subjects growing progressively younger. In some of these video descriptions, the users “solicited donations” with hints of private, more intimate videos.

As the subjects of the videos became younger, the more overtly sexual descriptions disappeared. Some of the videos of children in bathing suits were family videos, uploaded by parents and made with innocent intent. Other videos were from Eastern European countries, uploaded by fake accounts. Instagram accounts for the young children were often linked to the videos, allowing people to find out more about the children’s identities.

Home videos uploaded by parents received inordinate amounts of traffic when they showed children in bathing suits.

YouTube claimed that routine tweaks to its algorithm caused the auto-generated lists to appear and, coincidentally, to vanish. But The Times argues that the one step that would ultimately resolve the issue, removing videos with children in them from the recommendations, has not been taken.

The journalist who wrote the story, Max Fisher, tweeted that researching this story “made me physically ill and I've been having regular nightmares.”

The company has struggled with its pedophile problem in the past. In February, 400 channels were deleted for being part of child exploitation rings.