Twitter announced on Tuesday it will institute a new policy to crack down on how visible allegedly bad tweets are on the site. Users who are believed to be engaging in “troll-like” behavior will be corralled into a “[s]how more replies” section while users deemed to be contributing to “healthy” conversations will be unaffected.

In a statement, Twitter’s VP of Trust and Safety Del Harvey and Twitter’s Director of Product Management, Health David Gasca wrote about the problem of addressing so-called “trolls” on the site. According to the statement, while some trolling can be good-natured, other trolling can “distort and detract from the public conversation on Twitter, particularly in communal areas like conversations and search.”

CEO Jack Dorsey (pictured here) said the “ultimate goal is to encourage more free and open conversation.” He said Twitter will be “[l]ooking at behavior, not content” in order to reduce trolling. He vowed to discuss the issue in more depth on Periscope.

In his tweet, Dorsey shared a BuzzFeed article with the headline, “Twitter Is Going To Limit The Visibility Of Tweets From People Behaving Badly.” The subhead visible on Twitter read, “Act like a jerk, and Twitter will start limiting how often your tweets show up.”

The statement also claims that fewer than 1 percent of Twitter users make up the majority of accounts that are reported to the site, but most of their content does not violate the rules. Therefore, in order to limit people’s interaction with those accounts, Twitter is implementing algorithms behind the scenes. Undercover videos released by Project Veritas in early January had raised the issue of “shadow banning” with a hidden-camera interview of a former Twitter engineer.

As the statement explains, the site will use “many new signals” that are “not visible externally” to rank tweets and hide from view tweets from users who are seemingly trolls:

There are many new signals we’re taking in, most of which are not visible externally. Just a few examples include if an account has not confirmed their email address, if the same person signs up for multiple accounts simultaneously, accounts that repeatedly Tweet and mention accounts that don’t follow them, or behavior that might indicate a coordinated attack. We’re also looking at how accounts are connected to those that violate our rules and how they interact with each other.

In order to see tweets from offending users, you will have to manually click the “[s]how more replies” feature and opt to see everything in your feed in your settings.

Speaking to Slate, Harvey elaborated that the site will allegedly decide who is negatively impacting a conversation by surveying how many complaints a user receives and whether they are blocked or muted.

When questioned about whether the policy will impact bots or real people, Harvey told Slate it will impact “accounts that are having the maximum negative impact on the conversation.”

Alarmingly, Harvey confirmed to Slate that the site will not allow users to know whether their accounts have been impacted by this new policy, leading them to apparently tweet into a void.

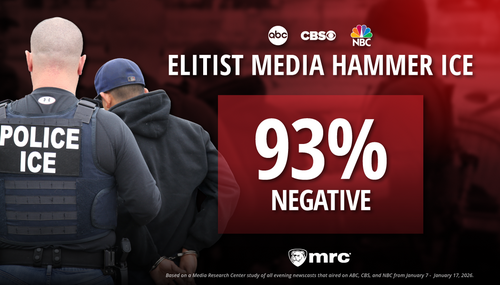

This method raises questions about who will be considered a troll. Considering Project Veritas videos showed Twitter employees discussing how to keep conservative personalities out of people’s feeds, and the Media Research Center’s “Censored!” report noted that 12 out of the 25 American Trust and Safety Council members are liberal organizations, the definition of a troll seems very subjective and ripe for the same kind of abuse it allegedly tries to stop.